Introduction to Engineering Data Management

Engineering Data Management is a critical discipline that enables organizations to effectively acquire, store, organize, integrate, analyze, and maintain engineering data throughout its lifecycle. In engineering-intensive sectors (manufacturing, construction, aerospace, automotive, etc.), it serves as the backbone of successful projects, ensuring that engineers, designers, and decision-makers can access reliable information when they need it.

In practice, “engineering data” encompasses everything from CAD drawings and product designs to IoT sensor readings on industrial equipment, simulation results, maintenance logs, and beyond. Managing this breadth of data is no small feat – especially as the volume, velocity, and variety of data continue to grow exponentially.

To appreciate the challenge, consider the current data explosion: the volume of data generated worldwide is expected to soar to 185 zettabytes by 2025. Moreover, an estimated 80% of that data will be unstructured (documents, images, sensor streams, etc.), which is often the case for engineering data (think of complex 3D models, technical reports, or machine logs). A significant driver of this growth is the rise of connected devices – IoT applications alone are forecast to produce 90 zettabytes of data annually by 2025 – and much of this data is relevant to engineering workflows, from smart factory sensors to connected vehicles. The sheer scale and complexity mean that ad-hoc or manual approaches to handling engineering information are no longer tenable.

Our Vision

Sphere brings a holistic approach to engineering data management, recognizing that success requires more than just storage or analytics in isolation. It involves end-to-end coordination – from data aggregation and architecture assessment to business intelligence (BI) and AI/machine learning-powered data science. In other words, effective engineering data management spans the entire pipeline: how data is generated and captured, how it’s stored and governed, and how it’s ultimately used to drive decisions.

Modern Workflows for Engineering Data

It wouldn’t be surprising if we say that data is as crucial as the engineering talent itself. Virtually every step of modern workflows – from initial R&D and design to testing, manufacturing, and field operations – is either generating data or consuming it (often both). Managing this data effectively is therefore essential for multiple reasons:

Collaboration and Single Source of Truth

Engineering projects are often distributed across teams and geographies, involving multiple stakeholders (design, manufacturing, quality, suppliers, clients). Without a centralized, well-managed data repository, teams risk working on outdated or inconsistent information, leading to errors and costly rework.

A lack of organized design data (e.g. scattered CAD files) makes it difficult to track changes or know which version is current. Establishing a single source of truth ensures that everyone accesses the same up-to-date drawings, specifications, and performance data, improving collaboration. In fact, in a 2023 industry survey, 55% of companies with a formal data strategy cited improved internal collaboration as a top benefit. This means better alignment between departments – for example, design changes seamlessly propagate to manufacturing plans and maintenance documents, avoiding miscommunications.

Efficiency and Time Savings

Engineers and knowledge workers spend a surprising amount of time just searching for information. IDC data shows the average knowledge worker may spend about 2.5 hours per day (roughly 30% of the workday) searching for information they need. For highly paid engineering teams, this is an enormous productivity drain. When data is siloed or poorly organized, engineers might waste hours looking for the latest test report or manually piecing together data from different systems.

Streamlined data management tackles this inefficiency by making information easy to find, retrieve, and trust. The payoff is significant – less time chasing data and more time spent on value-added engineering work (design, analysis, innovation). Reducing “data hunting” time also accelerates project timelines, which is critical as businesses push to shorten development cycles.

Quality, Innovation and Decision-Making

Good data management directly impacts the quality of engineering outcomes. Decisions are only as good as the data behind them. Incomplete or erroneous data can lead to design flaws, safety issues, or suboptimal performance. In the construction industry, for example, “bad data” was found to be potentially responsible for 14% of avoidable rework, showing how data errors translate to real waste. Conversely, when data is well-governed and analyzed, organizations can unlock insights for innovation – identifying patterns in product performance, spotting field failure trends to improve the next design iteration, or using predictive analytics to optimize maintenance schedules.

Modern trends like digital twin simulations, AI-driven design optimization, and generative engineering all depend on having high-quality, well-managed data as fuel. C-level leaders recognize that being “data-driven” is a competitive advantage: one study found over 90% of companies are prioritizing data initiatives for precisely this reason. In engineering contexts, this translates to faster problem-solving and more confident decision-making based on evidence rather than guesswork.

Modern Technologies and Industry 4.0

The push toward Industry 4.0 and smart, connected operations has elevated the importance of data management even further. Engineering firms are increasingly moving from on-premise systems to cloud platforms, driven by the need for scalability, improved accessibility, and real-time collaboration.

Cloud-based data environments allow geographically dispersed teams to work on the same datasets and models concurrently, and scale up to handle big data from IoT devices or simulations. The integration of IoT in engineering projects is making them more data-driven than ever – real-time sensor feeds, telemetry, and operational data need to be managed and fed into analytics pipelines. For instance, as IoT adoption increases, engineering projects become more responsive and resilient thanks to continuous data insights. However, this also means workflows now include streaming data ingestion, edge processing, and large-scale data storage, all of which require robust management. Additionally, emerging tech such as AR/VR for design reviews or blockchain for secure data exchange are entering engineering workflows.

These promise great benefits (e.g. immersive collaboration, tamper-proof records), but only if the underlying data is managed in a way that ensures integrity and accessibility. In short, innovations like AI, machine learning, and digital twins in engineering are only as effective as the data pipeline supporting them – underscoring the importance of a strong data management foundation.

Compliance, Security and Risk Management

Engineering projects often face stringent compliance requirements (for safety, regulatory standards, or client contracts). Proper data management helps enforce these by maintaining thorough records, version histories, and traceability (who did what, when). It also guards intellectual property – engineering data like CAD models, formulas, or test data are valuable IP that need protection. The explosion of data has unfortunately expanded the cybersecurity threat surface in engineering: a recent study noted 59% of AEC (architecture, engineering, construction) firms experienced a cybersecurity threat in the past two years.

Data breaches or loss can be devastating – imagine a competitor obtaining proprietary designs or a ransomware attack freezing critical project data. Thus, secure data management (access controls, encryption, backups) is not just an IT concern but a board-level concern. It’s tied to maintaining client trust and meeting legal obligations in industries where client data or safety-critical data is handled

Given these factors, it’s clear why C-level executives are paying attention. Engineering data management directly affects operational efficiency, product quality, time-to-market, innovation capacity, and risk exposure – all metrics that hit the bottom line. However, many organizations are still struggling to get it right. More than half of industrial companies do not have a professional data strategy in place for their engineering and project data.

The message is clear – streamlined engineering data management is no longer a nice-to-have, but a mission-critical component of modern engineering workflows.

Engineering Data Shouldn’t Be a Maze!

Scattered files, version confusion, and siloed systems slow your team down. Centralize your data, eliminate errors, and give engineers what they need—fast.

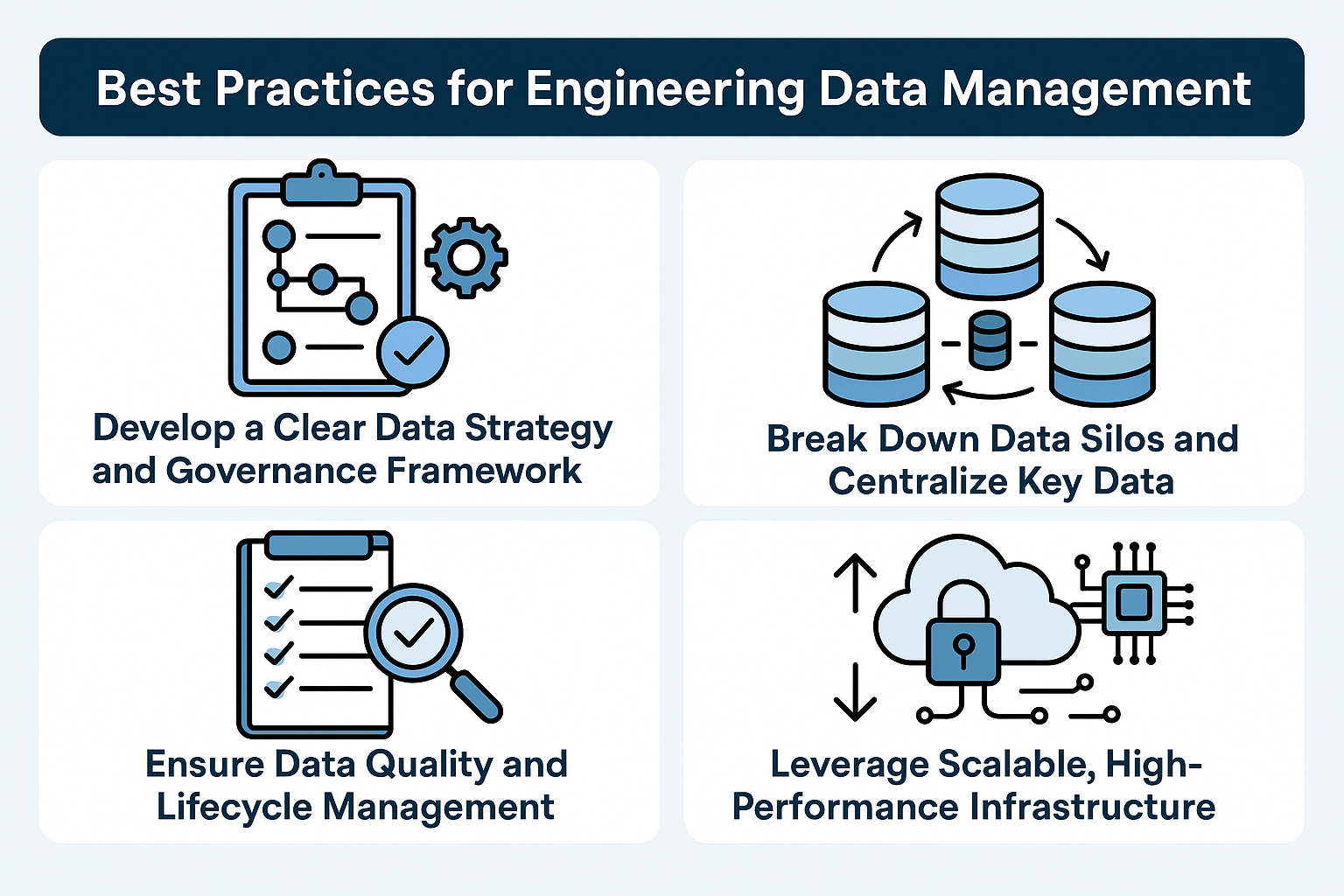

Best Practices for Engineering Data Management

Implementing engineering data management effectively requires a strategic approach. Below are several best practices that industry leaders – including Sphereinc – recommend to streamline data workflows and maximize value. These practices balance technical rigor with business considerations, ensuring that data management efforts support organizational goals and are sustainable in the long run:

1. Develop a Clear Data Strategy and Governance Framework

Every initiative should start with a plan. A formal data strategy aligns data management with business objectives – identifying what data is most important, how it will be used, and what success looks like. Equally crucial is data governance: the policies, standards, and roles that ensure data is handled properly (who can create or edit data, how it’s validated, etc.). Sphereinc’s data strategy consulting services take a deep look “under the hood” of current data practices, including policy and standards development. The goal is to design a governance framework tailored to the organization’s structure and needs, promoting effective data management practices throughout the company

Concretely, this means establishing data owners/stewards, defining data quality standards, and setting up governance bodies or committees that oversee major data-related decisions. When governance is in place, data governance becomes an enabler of trust and reliability rather than a bureaucratic hurdle. Leaders should also secure executive sponsorship for the data strategy – C-level support ensures that initiatives get the resources and cultural emphasis needed. Ultimately, a clear strategy and governance model provide the foundation upon which all other practices build.

2. Break Down Data Silos and Centralize Key Data (Single Source of Truth)

One of the biggest hurdles in engineering organizations is fragmented data – design files on individual laptops, test results in departmental spreadsheets, maintenance records in a legacy system that doesn’t talk to anything else. A best practice is to consolidate and integrate these data sources into a unified platform or architecture. This doesn’t mean one monolithic database for everything, but rather a well-architected ecosystem where data flows freely to where it’s needed. For instance, product design data (CAD models, BOMs, drawings) might be managed in a Product Lifecycle Management (PLM) or Product Data Management system, while sensor data streams into a data lake – but these can be linked or indexed so that related information is accessible together. Organizing design data is especially vital: without a structured system, engineers often deal with version confusion and spend time searching for files. A lack of organized design data – e.g. CAD files scattered on drives or cloud folders – leads to inadvertent use of outdated versions, errors, and rework. Adopting a central repository or PLM for design documents, with proper version control, solves this by ensuring everyone works off the latest approved data. Similarly, consolidating part catalogs and project data (rather than having “tons of Excels” in different places) eliminates inconsistencies.

Companies should aim for a “single source of truth” for each major data domain (product data, project data, operational data), or implement a data federation mechanism that makes disparate sources searchable in one interface. This significantly improves efficiency and data consistency. Modern data integration techniques like enterprise service buses, APIs, or data virtualization can help tie systems together. Sphere typically begins engagements with a thorough data architecture assessment (data aggregation, storage, and flow analysis) to identify silos and integration opportunities, ensuring that the final architecture provides unified access to data across the engineering value chain.

3. Ensure Data Quality and Lifecycle Management

Quality is paramount – “garbage in, garbage out” holds very true in engineering. Best practices here include implementing robust ETL (Extract-Transform-Load) pipelines or data cleaning processes to validate and standardize data as it moves between systems. Raw data from devices or human inputs often contains errors or inconsistencies; without cleaning, these issues propagate and undermine trust. For example, IoT sensor feeds might have outliers or missing readings – automated routines should catch and correct or flag these. Likewise, when importing legacy data into a new system, cleansing and normalization (units, formats, naming conventions) are key. According to a Wakefield Research survey, data professionals still spend an inordinate amount of time (up to 40%) on data quality firefighting, so investing upfront in quality pays off by freeing engineers from manual fixes. It’s also wise to monitor data quality continuously (data profiling, anomaly detection) rather than a one-time effort.

Master data management (MDM) principles can be applied for reference data like part numbers or customer info, to maintain consistency across applications. In tandem with quality, consider data lifecycle management: not all data should live forever in active systems. Define retention policies – what needs to be archived after a project ends, how to handle version histories, when to purge obsolete data. Data versioning and change management practices allow teams to trace the evolution of critical datasets (for example, maintaining a clear revision history of a design or simulation). This provides auditability and supports learning from past projects. Regular data archiving of historical engineering data ensures older information remains available for reference or compliance, but doesn’t clutter day-to-day systems. Effective lifecycle management also includes backup and disaster recovery planning – something often overlooked until a failure happens. Routine backups (with off-site storage or cloud redundancy) and tested restore procedures are non-negotiable best practices to prevent catastrophic data loss.

4. Leverage Scalable, High-Performance Infrastructure (Embrace Cloud and Automation)

Engineering data can be extremely large (think multi-gigabyte CAD assemblies or petabytes of IoT data) and computationally intensive (e.g. running CFD simulations or AI models on data). Trying to manage this on traditional infrastructure can result in slow performance and scalability bottlenecks. A best practice is to design for scale and speed from the outset, which often means leveraging cloud and modern data technologies. Cloud platforms offer virtually unlimited storage and the ability to scale compute resources on-demand for big data processing or analytics bursts. Sphereinc frequently helps clients modernize their data platforms by moving from on-premises databases to cloud data lakes and warehouses, enabling them to harness the power of big data with solutions tailored to manage vast data volumes, while ensuring scalability, performance, and security. The cloud also simplifies global data access and collaboration (with appropriate access controls). In addition to cloud storage, consider distributed computing frameworks (like Apache Spark or Flink) for large-scale data processing, and containerization for deploying data services flexibly.

Infrastructure-as-Code to quickly spin up environments, automated data pipelines that ingest and process data continuously, and DevOps/DataOps practices that reduce manual intervention. An emerging best practice is adopting DataOps, an agile methodology for data management that treats data pipelines similar to software CI/CD – continually testing data flows, monitoring quality, and deploying improvements. Automation tools can also help enforce governance (for example, automatically tagging sensitive data or enforcing data retention rules). The bottom line is that modern engineering data management should not be stuck in legacy mode – it should make use of the best tools of the trade (cloud, big data tech, AI for data management) to handle the scale of today’s data efficiently. This ensures that as your organization grows or projects multiply, the data backbone scales smoothly without performance lags or downtime.

5. Implement Strong Security and Compliance Measures

With great data comes great responsibility. Given the sensitive and proprietary nature of much engineering data, security must be built into every layer of data management. Best practices include role-based access control (RBAC) – ensuring each user or team only accesses the data they need – and robust authentication and authorization systems. Important data should be encrypted at rest and in transit, especially if using cloud services or external collaboration. In regulated industries (e.g. defense, healthcare devices, etc.), compliance frameworks (like ITAR, ISO 27001, or GDPR for personal data in EU) impose specific requirements on data handling; compliance should be treated as a core requirement, not an afterthought. Sphereinc emphasizes governance and security hand-in-hand, for example by establishing clear data policies and access controls to protect sensitive engineering data from unauthorized access or manipulation.

Regular audits and monitoring are a best practice to detect any anomalies or breaches – for instance, maintaining audit logs of who accessed or changed critical design files, and using intrusion detection for your data repositories. Another aspect is data resilience: implementing fail-safes like redundant storage, version backups, and even cyber insurance for worst-case scenarios. The importance of security is underscored by rising threats; as noted earlier, a majority of engineering firms have faced cyber incidents recently, and strengthening cybersecurity protocols (like AI-driven threat detection and zero-trust architectures) is becoming the norm to safeguard engineering data.

6. Foster a Data-Driven Culture and Skillset

Lastly, tools and processes alone won’t succeed without the right people and mindset. Leading organizations invest in building a culture that values data – where decisions at all levels are backed by data analysis, and employees are encouraged to use data to innovate. This cultural shift may involve training engineers and project managers on new data platforms or analysis tools, or hiring data specialists (data engineers, data scientists) to work alongside domain experts. In engineering firms, it’s increasingly common to have dedicated data engineering teams that focus on managing and curating data for the rest of the organization. Ensuring that end-users (engineers, designers) have easy-to-use interfaces to interact with data (such as self-service BI dashboards or intuitive search portals for technical documents) will drive adoption of the data systems put in place.

According to a construction industry study, 60% of respondents said data management and analysis skills are essential for teams to work effectively – underscoring the need for talent development in this area. Sphereinc often provides not just the technical solution but also training and change management support, so that client teams can fully embrace new data workflows. Encourage champions within teams to demonstrate quick wins – for example, an engineer automating a previously manual data gathering task and saving hours each week – to build momentum. Over time, a data-driven culture will sustain and amplify the benefits of your engineering data management investments, as people continually find new ways to leverage data for better outcomes.

By following these best practices – from high-level governance down to daily usage habits – organizations can significantly streamline their engineering data management. The journey may require effort and change, but the reward is a more agile, efficient, and intelligent operation. As Sphere Partners often advises clients: treat your data as a strategic asset, and manage it with the same rigor as you would manage your finances or human capital. Doing so sets the stage for the next section, where we consider the tools and software solutions that can bring these best practices to life.

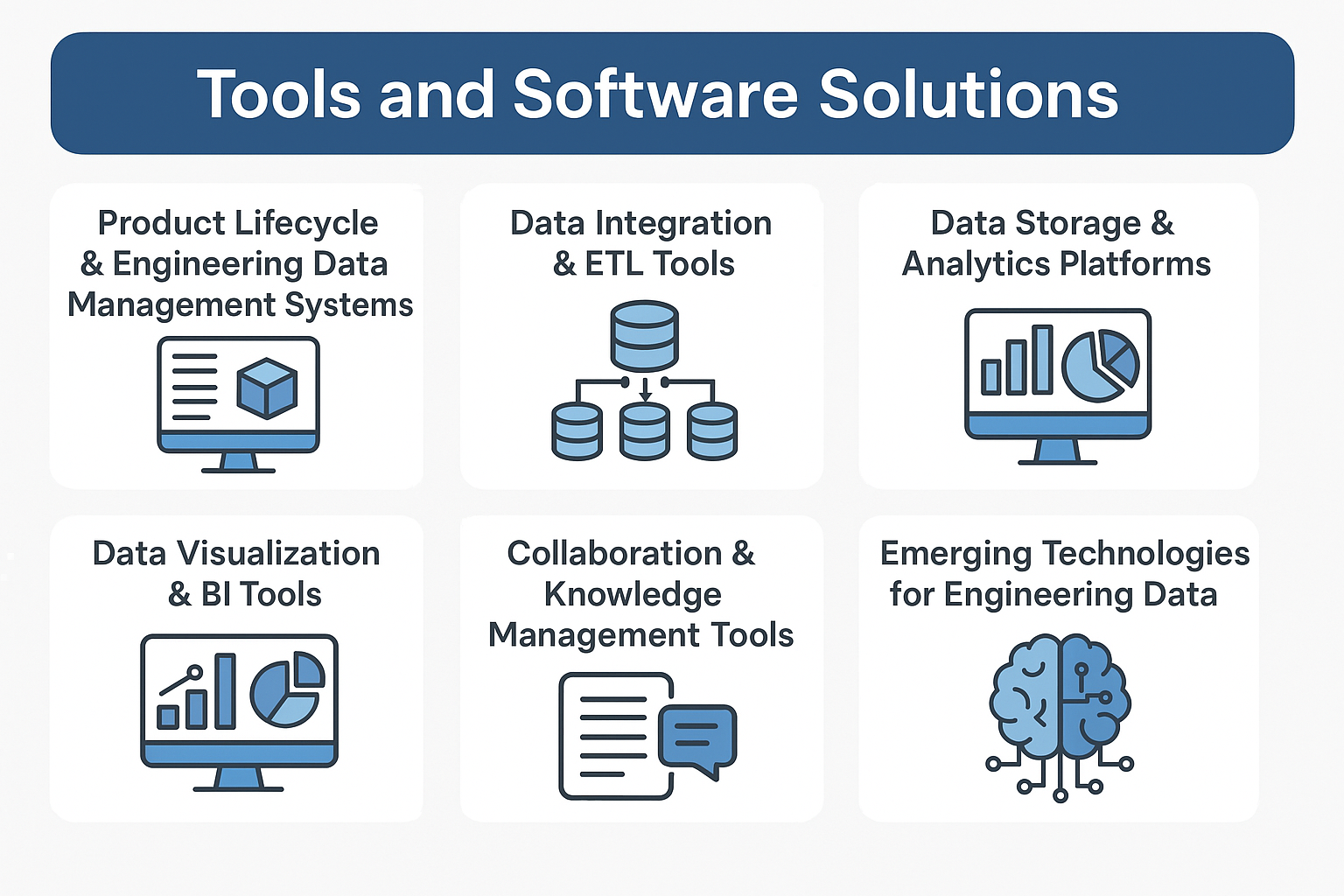

Tools and Software Solutions for Your Data

Implementing engineering data management at scale typically involves a suite of tools and software solutions. These range from specialized engineering systems to enterprise data platforms and emerging technologies. The right toolset can vastly improve how data is captured, stored, and utilized. Below, we outline key categories of tools and solutions, along with examples and how they fit into a streamlined data workflow:

Product Lifecycle Management and Engineering Data Management Systems

For design and product data, PLM or PDM systems are foundational. They act as the central repository for all product-related information – CAD models, drawings, specifications, Bill of Materials (BOMs), simulation files, and revision histories. Top PLM solutions (e.g., Siemens Teamcenter, PTC Windchill, Dassault Systèmes ENOVIA) provide engineers with version control, access control, and collaboration features tailored to engineering workflows. By using PLM, companies ensure that design data is organized and accessible, which addresses the earlier pain point of scattered CAD files and Excel BOMs.

Modern cloud-based PLM/PDM tools (including some SaaS solutions) offer easier deployment and remote access. These systems often integrate with CAD software directly, so that when an engineer saves a design, it’s automatically vaulted with metadata, notifications can alert stakeholders of changes, etc. PLM solutions also enforce workflows (like approval processes for design releases) and maintain an audit trail – valuable for both quality control and compliance. For smaller organizations or specific needs, there are lighter-weight engineering data management tools focusing on drawing management or requirements management, but the concept is similar: establish a single source of truth for engineering documents. Sphereinc has experience implementing and customizing such systems, ensuring they align with each client’s processes (for example, integrating PLM with ERP systems so that engineering changes flow into procurement and manufacturing seamlessly).

Data Integration and ETL Tools

Given that engineering data comes from many sources (PLM, ERP, IoT platforms, test databases, etc.), tools that integrate data and transform it for analysis are crucial. Enterprise Application Integration (EAI) platforms or Enterprise Service Buses (ESBs) can connect different software so that, say, a change in a design triggers an update in the manufacturing system. Extract-Transform-Load (ETL) and ELT tools (like Informatica, Talend, Microsoft SQL Server Integration Services, or modern cloud-native tools like Fivetran and Azure Data Factory) allow organizations to pull data from various systems into a central repository or data warehouse. In an engineering context, you might use ETL to gather data from a PLM, a maintenance management system, and an IoT sensor feed, and then combine them to analyze, for example, how a design change affected field failure rates.

Data pipelines can also be built with programming for custom needs, but using established tools accelerates development and provides scalability. A newer trend is streaming data integration using platforms like Apache Kafka, which Sphere has utilized in cases where real-time data (machine sensors, telemetry) needs to be integrated continuously rather than in batch. These integration tools often come with scheduling, error handling, and monitoring capabilities – aligning with best practices to automate data flows and ensure data quality (with features to cleanse or reject bad data). By deploying robust integration pipelines, organizations break down silos and have the data readily available in the right form for analysis or reporting.

Data Storage and Analytics Platforms

Once integrated, data needs to be stored in a manner suitable for analysis. This is where data warehouses, data lakes, and lakehouse architectures come in. Data warehouses like Snowflake, Amazon Redshift, Google BigQuery, Azure Synapse store structured data in a schema optimized for querying (often SQL-based analytics). They are great for structured data from business systems or summarized sensor data.

Data lakes, usually cloud storage like AWS S3, Azure Data Lake Gen2, or Hadoop HDFS, can store raw, unstructured, or semi-structured data at scale – ideal for things like raw IoT logs, big simulation outputs, or documents – basically storing vast volumes of data cheaply and scalably. Many companies adopt a hybrid “lakehouse” approach that combines a data lake with warehouse-like query layers (Databricks on Delta Lake is one popular example) to allow analysis on both structured and unstructured data. Sphere often guides clients on selecting the right platform or combination: for instance, leveraging cloud data lakes for their scalability and then layering analytics engines for performance.

On top of storage, analytics engines or query tools are needed: frameworks like Apache Spark enable big data processing (e.g. running algorithms on your entire data lake). There are also domain-specific analytics tools – for engineering, one might use IIoT analytics platforms (like GE Predix or PTC ThingWorx) for equipment data, or specialized simulation data management tools for CAE results. However, increasingly we see convergence where general analytics platforms can handle engineering data with the right data science effort.

AI and machine learning platforms deserve mention here too: tools like TensorFlow, PyTorch or autoML services can be used to develop predictive models (for example, predictive maintenance or yield optimization models) once the data is available. Companies that invest in data science are essentially adding an intelligence layer on top of their managed data, discovering patterns and predictions that give them a competitive edge.

Data Visualization and BI Tools

Presenting data in an understandable way is vital for decision-makers. Business Intelligence tools such as Tableau, Microsoft Power BI, or Looker can be used to create dashboards and reports for engineering data. For instance, a dashboard might display live metrics from manufacturing lines (throughput, temperature, vibration readings), quality KPIs, or project status indicators pulling from multiple systems.

By visualizing complex engineering data, these tools help surface insights and allow interactive exploration (drilling down into data by product line, by region, etc.). They are also useful for summarizing data for executives – e.g. a CDO or CTO might monitor data quality statistics or the ROI from data initiatives via a management dashboard. Another class of tools includes more technical visualization software often used by engineers for specific needs – think of CAE (computer-aided engineering) post-processing tools that visualize simulation data or IoT platforms that have their own UI for device data.

The key is to make data consumable to the people who need to act on it. A fancy data lake is of little use if engineers and managers cannot easily query it or get answers. Sphereinc ensures that as data pipelines are built, the end-user interface is also addressed, whether it’s custom web portals, integrated dashboards, or mobile access for on-site personnel. Self-service analytics (allowing engineers to do some querying or analysis without always relying on IT) is encouraged, with proper governance guardrails.

Collaboration and Knowledge Management Tools

Engineering data often intersects with broader knowledge management. Tools like Confluence, SharePoint, or specialized engineering wikis and requirements management tools help capture the context around data – meeting notes, design rationales, change discussions, etc. These aren’t data management per se, but when linked with actual data systems, they create a richer picture. For example, linking a requirement in a tool to the test results data that verifies it, or linking a design review note to the CAD model revision. Collaboration platforms that provide real-time sharing of data (for example, cloud CAD platforms where multiple engineers can co-edit or comment on a design) also contribute to streamlined data workflows. In recent times, integration between PLM and project management tools (like Jira or Asana for tracking tasks) helps tie data to action items. Sphereinc often helps companies integrate their data platforms with these collaboration tools – a common request from decision-makers is a unified view of project status that includes data-driven metrics alongside schedule or task information.

Emerging Technologies for Engineering Data

Some notable emerging solutions include: Digital Twin platforms (which create a live data-driven virtual model of a physical asset or system – useful in industries like aerospace, manufacturing, and energy to monitor and simulate performance in real-time); Knowledge graphs and data catalogs (which help in finding and relating data across silos by using metadata and semantic links – for example, automatically knowing which test reports relate to which design and which field asset); and AI-powered data management (like using AI to automatically classify engineering documents, or detect anomalies in data streams). Even blockchain is being explored to ensure authenticity of engineering data and streamline supply chain data exchange with tamper-proof records.

In summary, a modern engineering enterprise might have a PLM for design data, a data lake/warehouse for analytical data, integration tools gluing systems together, analytics tools to derive insights, and collaboration tools to act on those insights. Underpinning all this, of course, are the governance and security mechanisms discussed earlier. With the right ensemble of tools, companies can automate formerly manual tasks, achieve real-time visibility into operations, and empower their engineers and executives alike with reliable data at their fingertips. This set-up is the engine that drives streamlined workflows every day.

Case Studies on Engineering Data Management

To illustrate the impact of streamlined engineering data management, let’s explore a few real-world examples and scenarios. These case studies demonstrate how applying the right strategies and tools can deliver tangible business results.

Case Study 1: Enhancing Quality Control with Computer Vision

A manufacturer faced frequent quality issues due to manual defect inspections. Sphere Partners introduced a real-time computer vision system that quickly detects defects directly on the production line, improving product quality and reducing waste.

Quick Insight: Computer vision can quickly boost efficiency—start small to prove value before broader implementation.

Case Study 2: Smart Building Optimization Using IoT

A facility management company needed better oversight of building operations to save energy and improve comfort. Sphere integrated IoT sensors and analytics, enabling real-time monitoring and proactive maintenance, significantly cutting energy costs and improving occupant satisfaction.

Quick Insight: IoT solutions don’t need to be complex—clear dashboards with actionable insights often yield the fastest improvements.

Case Study 3: Streamlining Production with Robotic Process Automation (RPA)

A consumer electronics firm struggled with repetitive manual tasks causing productivity losses. Sphere implemented RPA to automate routine activities such as data entry and quality checks. This reduced errors and boosted overall operational efficiency.

Quick Insight: Automating repetitive tasks first quickly demonstrates value and paves the way for broader adoption.

These case studies highlight a common theme: when engineering data management is streamlined, organizations see concrete improvements in efficiency, reliability, and business outcomes. Whether it’s faster product development cycles, reduced downtime, or better use of knowledge, the impact is measurable. They also show that the journey involves a blend of strategy (governance, culture) and technology (tools, integration), exactly as discussed in earlier sections.

Sphere has positioned itself as a partner in achieving these outcomes. By bringing expertise in data strategy, data architecture, and implementation of cutting-edge solutions, we help decision-makers realize the full potential of their engineering data. In the end, success is when data ceases to be a bottleneck or an afterthought, and instead becomes a force multiplier for engineering and business innovation. The examples above serve as inspiration and proof that with the right approach, engineering data can be transformed from a headache into a strategic advantage – something every CEO, CTO, and CIO is striving for in the modern era.

Ready to transform your approach? Just contact us!