With the need to compete in the AI race, businesses find themselves at a crossroads. On one hand, sophisticated language processing has proven its value in real-world scenarios. On the other hand, advanced models often come with heavy computational costs. Large Language Models, while powerful, typically carry significant overhead, making them inaccessible for many organizations. This is where Small Language Models step in, offering a cost-effective, efficient alternative that still performs strongly on the tasks it is built for.

What is a Small Language Model?

A Small Language Model (SLM) is a type of artificial intelligence designed to perform natural language processing tasks using significantly fewer parameters than larger models. A model is generally considered small if it has fewer than 7 billion parameters, although the exact cutoff varies by source.

Unlike Large Language Models, these models are characterized by their compact architecture and lower computational demands. SLMs are trained on smaller, more curated datasets that are highly relevant to specific tasks, allowing them to perform with a high degree of accuracy and efficiency. This specialization makes SLMs particularly effective for applications that require precise language understanding and generation but do not necessitate the vast knowledge base of a Large Language Model (LLM). Moreover, as Darren Oberst mentioned, “small language models can probably do 80% to 90% of what the ‘mega model’ can do but you’re going to be able to do it at probably 1/100th the cost.”

Importance in the AI Landscape

Recent trends indicate a growing interest in smaller, more efficient models. SLMs play a critical role by offering a sustainable and accessible alternative to larger models. They democratize the use of AI technology by allowing smaller organizations and individual developers to implement sophisticated NLP tasks without the need for extensive resources. This accessibility is transforming various industries, enabling businesses to leverage AI for specific needs without the overhead of managing large-scale models.

As AI continues to integrate into daily operations across sectors, SLMs stand out for their ability to deliver targeted solutions efficiently, making them a vital component in the toolkit of modern AI applications.

Comparative Analysis: SLMs vs. Large Language Models

Overview

The field of artificial intelligence has seen a divergence in the development of language models, with significant distinctions between Small Language Models and Large Language Models. Both types of models serve distinct purposes and exhibit different efficiencies, costs, and application-specific advantages. This section provides a detailed comparison, offering insights into their optimal use cases.

Cost and Resource Efficiency

SLMs offer a distinct advantage in terms of cost-efficiency and resource usage. They are designed to be lean and focused, requiring less computational power and data for training, which significantly reduces their operational costs. For example, training and deploying an SLM may only require standard computing resources, which contrasts sharply with LLMs like GPT-4, which demand robust and expensive GPU clusters for similar tasks. This makes SLMs more accessible to organizations with limited technical infrastructure or those operating under stringent budget constraints.
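To make the resource gap concrete, here is a rough back-of-the-envelope sketch. It assumes that the memory needed to hold a model's weights is roughly the parameter count times the bytes used per parameter. The function name and the example sizes are illustrative assumptions, and the estimate ignores activations, caches, and training state, so real requirements are higher.

```python
def weight_memory_gb(num_params: int, bytes_per_param: float) -> float:
    """Approximate memory needed to hold model weights, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Illustrative sizes only; 7 billion is the "small" threshold used in this article.
examples = [
    ("100M-parameter model", 100_000_000),
    ("7B-parameter model", 7_000_000_000),
]

for label, params in examples:
    fp32 = weight_memory_gb(params, 4)  # 32-bit floats: 4 bytes per weight
    fp16 = weight_memory_gb(params, 2)  # 16-bit floats: 2 bytes per weight
    print(f"{label}: {fp32:.2f} GB at 32-bit, {fp16:.2f} GB at 16-bit")
```

Under these assumptions, a model with a few hundred million parameters fits comfortably in the memory of an ordinary laptop, while a model at the 7 billion mark already needs around 14 GB for 16-bit weights alone.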

Performance Specificity and Application Precision

SLMs are tailored to perform exceptionally well in specific tasks or narrow domains. Because they are trained on targeted datasets, they can achieve higher accuracy in their specialized fields than general-purpose LLMs. For instance, an SLM developed for legal document analysis can outperform a general LLM in understanding legal jargon and predicting outcomes based on legal precedents. This specialization means that SLMs can deliver more relevant results with quicker response times, making them well suited to industries requiring high precision and efficiency.

Scalability and Flexibility

While LLMs are praised for their broad knowledge and versatility, adapting them to a specific task is costly. LLMs often require extensive retraining or fine-tuning, which can be resource-intensive. In contrast, SLMs, with their smaller size and focus, can be quickly adapted and scaled to new requirements or changes within a domain without the need for extensive additional training. This makes SLMs particularly valuable for dynamic industries where rapid adaptation to new data or user needs is crucial.

Implementation and Maintenance

The implementation and ongoing maintenance of SLMs are generally less complex and costly than those of LLMs. SLMs’ simpler architectures and smaller sizes mean that they can be integrated into existing systems with less effort and can run efficiently on less powerful machines or even in edge devices. This also translates into lower maintenance costs, as the systems they run on are less prone to the complexities and vulnerabilities associated with large-scale AI infrastructures.

Technical Insights: How Do SLMs Work?

Architecture and Design

Small Language Models are designed with a focus on minimalism and efficiency. Unlike their larger counterparts, SLMs operate with significantly fewer parameters, typically ranging from a few million to a few billion. This streamlined architecture allows SLMs to perform tasks without the extensive computational overhead required by Large Language Models. The core design of SLMs often includes simplified versions of transformer architectures, which are central to most modern AI language processing. These models leverage layers of attention mechanisms that selectively focus on different parts of the input data, which is critical for achieving high performance in specific tasks.
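The attention mechanism mentioned above can be illustrated with a minimal sketch of scaled dot-product attention, the standard building block of transformer layers. This is a simplified illustration, not the code of any particular SLM: it uses plain Python lists, the function names and toy vectors are assumptions, and it omits the learned projection matrices and multiple heads that real models use.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys
        ]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Toy input: three token vectors attending to each other (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

Each output row is a blend of all value vectors, weighted by how strongly the corresponding query matches each key. This is the sense in which the model "selectively focuses" on parts of the input.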

Training Techniques

SLMs are trained using a variety of specialized techniques that enhance their efficiency and task-specific performance:

- Knowledge Distillation: This technique involves training a smaller model (the “student”) to replicate the behavior of a larger, pre-trained model (the “teacher”). By doing so, the student model learns to approximate the complex functionalities of the teacher model but with a fraction of its parameters.

- Pruning: After an initial training phase, unnecessary connections within the neural network (parameters) are removed, which reduces the model’s size without significant loss in accuracy. This makes the model lighter and faster, suitable for deployment on devices with limited processing power.

- Transfer Learning: SLMs often benefit from transfer learning, where a model developed for one task is repurposed and fine-tuned for another related task. This approach allows SLMs to quickly adapt to new domains with minimal additional data and training.

- Data Curation: Ensuring the quality of training data is paramount. SLMs are often trained on highly curated datasets that are specifically tailored to the tasks they are designed to perform. This selective data strategy leads to models that are not only faster but also more accurate within their scope of application.
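Two of the techniques above, knowledge distillation and pruning, can be sketched in a few lines. This is a minimal illustration under stated assumptions: the distillation loss shown is the KL divergence between temperature-softened teacher and student outputs (practical setups usually combine it with a loss on the true labels), and the pruning rule removes the weights with the smallest absolute values, which is one common criterion among several. All function names and numbers are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def magnitude_prune(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute value."""
    k = int(len(weights) * fraction)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


# A student whose outputs resemble the teacher's gets a lower loss.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 1.0, 4.0]
print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))

# Remove the half of the weights that contribute least by magnitude.
print(magnitude_prune([0.8, -0.05, 0.3, 0.01, -0.6, 0.02], 0.5))
```

During distillation, the student's parameters would be updated to reduce this loss. After pruning, the zeroed weights can be stored and computed sparsely, which is where the savings in size and speed come from.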

Deployment Strategies

Deployment of SLMs is geared towards efficiency and flexibility:

- On-Device AI: Due to their small size, SLMs are ideal for on-device deployment, allowing applications to run AI models locally on smartphones, IoT devices, or edge computers. This capability is crucial for applications needing to function in environments with limited or no connectivity.

- Real-Time Processing: The lightweight nature of SLMs enables them to process data in real time, which is essential for applications such as language translation apps, interactive voice response systems, and mobile personal assistants.

- Modular Integration: SLMs can be easily integrated as modules within larger systems. This modularity allows businesses to deploy AI capabilities selectively, expanding or altering them as needs evolve without major overhauls to the underlying system infrastructure.
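As a rough illustration of modular integration, the sketch below routes each request to a task-specific model and falls back to a general one when no specialist is registered. Everything here is hypothetical: the `ModelRouter` class, the task names, and the stand-in functions are assumptions for illustration, and a real system would wrap actual model calls behind the same interface.

```python
from typing import Callable, Dict

# A "model" here is any callable that maps input text to output text.
TextModel = Callable[[str], str]


class ModelRouter:
    """Dispatches each request to the model registered for its task."""

    def __init__(self, fallback: TextModel) -> None:
        self._models: Dict[str, TextModel] = {}
        self._fallback = fallback

    def register(self, task: str, model: TextModel) -> None:
        """Add or replace the model for one task without touching the others."""
        self._models[task] = model

    def run(self, task: str, text: str) -> str:
        """Use the task's model if one is registered, else the fallback."""
        model = self._models.get(task, self._fallback)
        return model(text)


# Stand-in functions; a real deployment would call actual models here.
def order_status_slm(text: str) -> str:
    return f"[order-status SLM] {text}"


def general_model(text: str) -> str:
    return f"[general model] {text}"


router = ModelRouter(fallback=general_model)
router.register("order_status", order_status_slm)

print(router.run("order_status", "Where is my package?"))
print(router.run("legal_question", "Can I return a used item?"))
```

Because each model sits behind the same small interface, a specialist can be added, swapped, or removed with a single `register` call, leaving the rest of the system unchanged.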

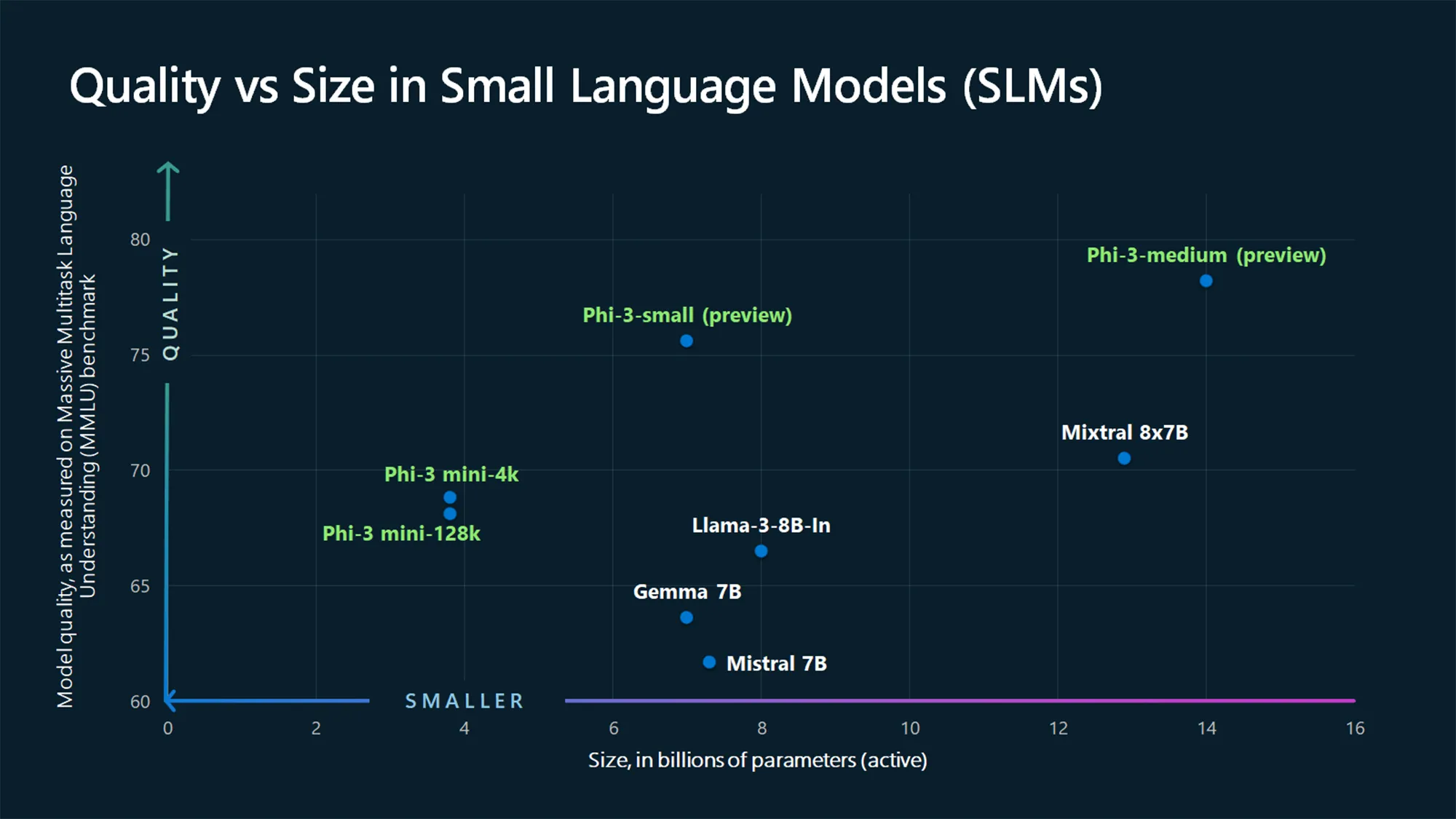

Microsoft Comparison of Different Language Models

Core Applications of Small Language Models

Small Language Models are being implemented across various industries, showcasing their flexibility and utility. Here are some real-world examples and the technologies that are enabling these applications:

Customer Service Automation

SLMs have revolutionized customer service by enabling the automation of chatbots and virtual assistants that can handle inquiries with high accuracy and personalized responses. For example, a retail company might deploy an SLM to manage customer queries about product availability, order status, and return policies. This application of SLMs not only enhances customer satisfaction by providing quick and accurate responses but also reduces the workload on human agents, allowing them to focus on more complex customer service issues. A notable case is that of a telecom company using an SLM-powered assistant to resolve common technical issues, achieving a 40% decrease in call volume to human agents.

Example: SLMs power customer service chatbots that handle routine inquiries such as product FAQs, troubleshooting steps, and account management issues. A notable implementation is their use by online retail giants to manage high volumes of customer interactions during peak sales periods.

Technology: Chatbot frameworks like Google’s Dialogflow and Microsoft Bot Framework can be combined with SLMs to provide efficient and scalable customer support solutions. In such setups, the SLM interprets user queries and helps deliver coherent, contextually relevant responses.

On-Device AI and Edge Computing

SLMs are ideal for on-device AI applications due to their compact size and efficiency. They enable powerful AI functionalities directly on users’ devices, such as smartphones, IoT devices, or even on edge servers in remote locations. For instance, a security system equipped with SLMs can process and analyze video feeds locally to detect unusual activities without the need to send data to the cloud, ensuring privacy and speed. Another example is smart wearables that use SLMs to provide real-time health monitoring and advice, operating independently of continuous cloud connectivity.

Example: SLMs can handle voice-activated commands and local data processing. For instance, a voice assistant on a smartphone that works offline can rely on an SLM to set reminders, answer questions, and control other connected devices without connecting to the cloud.

Technology: TensorFlow Lite and PyTorch Mobile are examples of frameworks that facilitate the deployment of SLMs on mobile and edge devices. These tools optimize AI models to run efficiently in environments with limited computational resources.

Real-Time Language Translation

SLMs facilitate real-time language translation, which is critical in global communication and content accessibility. These models can be integrated into mobile apps or web platforms to provide instant translation services. For instance, a travel app might use an SLM to help users navigate foreign languages in real-time by translating signs, menus, or spoken directions. The efficiency of SLMs ensures that the translation process is fast enough to be used in live conversation scenarios, enhancing the user’s experience and communication capabilities in diverse linguistic environments.

Example: Instant translation apps on smartphones and web platforms utilize SLMs to provide real-time translation between languages. This technology is crucial for travelers or professionals in multilingual meetings, allowing for seamless communication across language barriers.

Technology: OpenNMT and MarianNMT are open-source neural machine translation frameworks that support the deployment of SLMs for fast and accurate translation services directly on users’ devices.

Healthcare

In healthcare, SLMs are used to automate the processing of clinical documentation and facilitate patient communication. A specific example includes a healthcare provider using an SLM to interpret and summarize patient records, extracting essential information quickly to aid in diagnosis and treatment planning. Another application is in patient interaction platforms, where SLMs provide responses to common health inquiries, schedule appointments, and send reminders for medication, significantly improving patient engagement and care efficiency.

Example: SLMs are used in medical applications for parsing and summarizing patient records, helping healthcare providers quickly extract relevant information without sifting through extensive documentation manually.

Technology: Tools like Hugging Face’s Transformers library provide pre-trained SLMs that can be fine-tuned for specific tasks such as medical document summarization or patient interaction analysis.

IT Services

In the IT sector, SLMs are instrumental in automating routine tasks such as system monitoring, issue ticketing, and user support. An IT service provider might use an SLM to analyze system logs and automatically initiate troubleshooting processes for common problems. Additionally, SLMs can assist in the creation and categorization of support tickets, enabling human agents to prioritize and address more critical issues swiftly.

Example: SLMs automate the monitoring of network traffic to detect anomalies or potential security threats, significantly reducing response times to incidents.

Technology: Elasticsearch and its machine learning modules can integrate SLMs to analyze large streams of log data, providing insights and alerts in real time.

“At Sphere, we believe that the future of AI lies in precision and efficiency. Small Language Models represent a solution, allowing us to deliver highly specialized, cost-effective AI solutions for specific tasks. By embracing these models, we’re not just keeping pace with innovation—we’re setting the standard for what’s possible in AI-driven applications.”

– Leon Ginsburg, CEO

Challenges and Limitations of Small Language Models

Scope of Functionality

While SLMs excel in specific tasks due to their tailored design, this specialization also limits their versatility. Unlike Large Language Models that can generalize across a broad spectrum of tasks due to their vast parameter space, SLMs might struggle with tasks outside their training scope. This restricted functionality can hinder their applicability in scenarios that require a broader understanding or adaptability beyond their configured datasets.

Data and Training Constraints

SLMs are often constrained by the quality and quantity of data available for training. Since they are designed to operate efficiently with fewer resources, the data used must be highly relevant and well-curated, which can be a significant limitation, especially for less-resourced languages or specialized fields. Inaccuracies in data or biases can significantly affect the performance of SLMs, making them less reliable in critical applications.

Evolving Needs and Scalability Issues

The rapid evolution of technology and user expectations poses a challenge for SLMs, as they need constant updates and retraining to stay relevant. Additionally, scaling SLMs to accommodate growing or changing requirements without compromising performance requires careful management of their architecture and capabilities. Balancing efficiency with scalability often necessitates trade-offs in speed, accuracy, or both.

Future Trends and Developments in Small Language Models

Innovations on the Horizon

Advancements in AI research are continuously shaping the development of SLMs. Innovations such as more advanced model compression techniques, better training algorithms, and novel neural network architectures are expected to enhance the efficiency and effectiveness of SLMs. These developments will likely expand the capabilities of SLMs, allowing them to process more complex queries and interact more naturally with users.

Expanding Capabilities and Applications

As SLMs become more advanced, their applications are expected to broaden significantly. Future SLMs could be deployed in more dynamic environments and for tasks that currently require human-like understanding and responses. The integration of SLMs into consumer electronics, complex enterprise systems, and even in emerging fields like telemedicine and autonomous navigation is anticipated to grow, showcasing their expanding utility.

The Role of SLMs in Democratizing AI

SLMs play a crucial role in democratizing AI by making powerful technology accessible to smaller organizations and developers without the need for extensive infrastructure. This accessibility encourages innovation and levels the playing field, allowing startups and smaller tech companies to compete with larger entities. As SLMs continue to evolve, their impact on making AI ubiquitous and beneficial across various sectors of society is expected to be significant, fostering a more inclusive digital future.

Conclusion

Small Language Models have emerged as a pivotal development in the field of artificial intelligence, tailored to perform efficiently within the constraints of limited computational resources. They excel in specific tasks due to their focused training on curated datasets, enabling them to deliver precise and rapid responses.

Despite their smaller scale compared to Large Language Models, SLMs have demonstrated remarkable capabilities across various applications, from customer service automation and on-device AI to real-time language translation and industry-specific solutions.

Potential Impact on Various Sectors

The deployment of SLMs is poised to significantly impact multiple sectors by enhancing operational efficiencies and reducing costs. In healthcare, SLMs can expedite diagnostic processes and patient communication. In retail, they improve customer service by handling routine inquiries swiftly, allowing human agents to tackle more complex issues.

The technology also has promising applications in sectors like finance, where it can streamline transactions and enhance security measures. As SLMs continue to evolve, their integration into industries such as manufacturing and logistics is expected to revolutionize these fields by optimizing supply chains and predictive maintenance strategies.

Final Thoughts on the Advancement of SLMs

The advancement of Small Language Models represents a significant stride towards making AI more accessible and sustainable. Innovations in model training and architecture are expected to further enhance the capabilities of SLMs, broadening their applicability and efficiency. As research continues to push the boundaries of what these compact models can achieve, the role of SLMs in democratizing AI technology becomes increasingly vital.

Looking forward, the continued refinement and adoption of SLMs are likely to catalyze a new wave of technological innovation, making advanced AI tools available to a broader audience and fostering a more inclusive digital future.

Ready to take the next step? Schedule a consultation with Sphere today to explore how our AI solutions can transform your operations and give you a competitive edge. Let’s use the power of SLMs together.